Transatlantic AI Divide: Trump Administration Vs. European AI Rulebook

The Trump Administration's Approach to AI: A Hands-Off Strategy

The Trump administration's AI policy was characterized by a hands-off, deregulatory approach, prioritizing AI innovation and minimizing government intervention. This strategy aimed to foster a robust US AI development sector by allowing private companies maximum freedom in their research and deployment of AI technologies.

- Emphasis on fostering innovation through minimal government intervention: The administration believed that excessive regulation could stifle innovation and hinder the growth of the AI industry.

- Focus on promoting AI development in the private sector: The primary focus was on supporting private sector initiatives, rather than directing significant public funding towards AI research (although some funding did exist).

- Limited focus on ethical considerations and data privacy: Concerns regarding algorithmic bias, discrimination, and data privacy were largely addressed through voluntary initiatives and industry self-regulation, rather than mandatory government oversight. This reflects the broader stance of the administration on deregulation.

- Potential drawbacks: This laissez-faire approach left gaps in consumer protection for AI applications and increased the risk of AI misuse. It also produced no binding national framework for addressing the ethical implications of rapid AI deployment.

- Examples of policies or lack thereof: Notable is the absence of any comprehensive federal legislation regulating AI during the Trump administration. Executive Order 13859 (February 2019) launched the American AI Initiative, and there were agency-specific initiatives related to AI applications in areas like defense, but these set priorities and guidance rather than binding, economy-wide rules.

Consequences of the Laissez-Faire Approach

The lack of strong AI regulation during the Trump administration carried several significant risks:

- Increased risk of algorithmic bias and discrimination: Without clear guidelines and oversight, the potential for biased algorithms in critical sectors like lending, hiring, and criminal justice increased significantly.

- Potential for market monopolies by large tech companies: Limited regulation allowed large tech companies to consolidate their dominance in the AI market, potentially reducing competition and innovation.

- Limited international cooperation on AI standards: The lack of a clearly defined national AI policy hindered international cooperation on the development of global AI standards and norms.

The European Union's AI Act: A Risk-Based Approach

In stark contrast to the US approach, the European Union has adopted a more proactive and comprehensive approach to AI regulation with the European AI Act. This legislation takes a risk-based approach, classifying AI systems according to their potential risks and applying different levels of regulation accordingly.

- Comprehensive legislation addressing various AI risks: The AI Act aims to address a wide range of risks associated with AI systems, including those related to safety, security, privacy, and human rights.

- Classification of AI systems based on risk levels: AI systems are categorized into unacceptable risk, high risk, limited risk, and minimal risk. This risk assessment framework determines the level of regulatory scrutiny applied to each system.

- Stricter regulations for high-risk AI systems: High-risk AI systems, such as those used in healthcare, law enforcement, and critical infrastructure, face the strictest regulations. This includes rigorous testing, transparency requirements, and human oversight mechanisms.

- Emphasis on transparency, accountability, and human oversight: The AI Act emphasizes the importance of transparency in AI algorithms and decision-making, as well as the need for human oversight to ensure accountability.

- Alignment with the GDPR (General Data Protection Regulation): The AI Act is designed to be compatible with the GDPR, ensuring that data privacy is protected in the development and deployment of AI systems.
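The risk-based classification described above can be pictured as a lookup from risk tier to obligations. The following is a minimal sketch, not a statement of the Act's legal text: the four tiers and the three example high-risk domains come from the list above, while the names `RiskTier`, `OBLIGATIONS`, `classify`, and `obligations_for` are hypothetical, and the rule that any unlisted domain is minimal risk is a simplifying assumption.

```python
from enum import Enum


class RiskTier(Enum):
    """The four risk levels named in the list above."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Example high-risk domains taken from the text; not an exhaustive legal list.
HIGH_RISK_DOMAINS = {"healthcare", "law enforcement", "critical infrastructure"}

# Simplified obligations per tier (assumption: heavily abbreviated).
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: ["prohibited"],
    RiskTier.HIGH: ["rigorous testing", "transparency requirements", "human oversight"],
    RiskTier.LIMITED: ["transparency requirements"],
    RiskTier.MINIMAL: [],
}


def classify(domain: str) -> RiskTier:
    """Toy rule: listed domains are high risk, everything else is minimal."""
    if domain.strip().lower() in HIGH_RISK_DOMAINS:
        return RiskTier.HIGH
    return RiskTier.MINIMAL


def obligations_for(domain: str) -> list[str]:
    """Return the simplified obligations attached to a domain's tier."""
    return OBLIGATIONS[classify(domain)]


if __name__ == "__main__":
    for d in ("Healthcare", "video games"):
        print(d, "->", classify(d).value, obligations_for(d))
```

In practice, classification depends on a system's intended use and the Act's annexes rather than a domain keyword, so the sketch only shows the shape of the idea: scrutiny scales with the assigned tier.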

Key Features of the European AI Act

The European AI Act includes several key features:

- Requirements for data governance and transparency: Strict rules for data collection, processing, and usage are essential to ensure accountability and fairness.

- Provisions for human oversight and intervention: Mechanisms ensure human involvement in high-stakes decisions made by AI systems.

- Mechanisms for enforcement and penalties: Significant penalties are in place for non-compliance, with fines of up to €35 million or 7% of worldwide annual turnover for prohibited practices, deterring unethical practices.

- Potential for fostering trust and ethical AI development: By prioritizing ethical considerations and transparency, the Act aims to build public trust in AI technologies.

The Transatlantic AI Divide: Implications and Challenges

The differing approaches to AI regulation between the EU and the US have created a significant Transatlantic AI Divide, posing several challenges:

- Potential for fragmentation of the global AI market: Divergent regulations may lead to the creation of separate AI markets, hindering cross-border collaboration and competition.

- Challenges for transatlantic data flows and collaboration: Differing data privacy regulations can create obstacles for the seamless transfer of data across the Atlantic.

- Need for harmonization of AI standards and regulations: International cooperation is crucial to establish common standards that promote both innovation and ethical AI development.

- Potential impact on competitiveness and innovation: The regulatory divergence could impact the competitiveness of companies on both sides of the Atlantic.

- Opportunities for bridging the divide through international dialogue and cooperation: Collaboration is essential to establishing globally accepted standards and preventing a fragmented AI landscape.

Conclusion

The diverging approaches to AI regulation between the Trump administration and the European Union highlight a significant Transatlantic AI Divide. While the Trump administration prioritized deregulation to stimulate innovation, the EU favored a risk-based approach prioritizing ethics and data privacy. This divergence presents both challenges and opportunities, and bridging it requires international collaboration to establish common standards and address shared concerns. Understanding the contrast between the Trump administration's policies and the European AI Act is crucial for navigating the future of AI development and ensuring responsible innovation on a global scale. Further research and dialogue are critical to fostering effective cooperation and mitigating the risks of this regulatory divergence.

Featured Posts

-

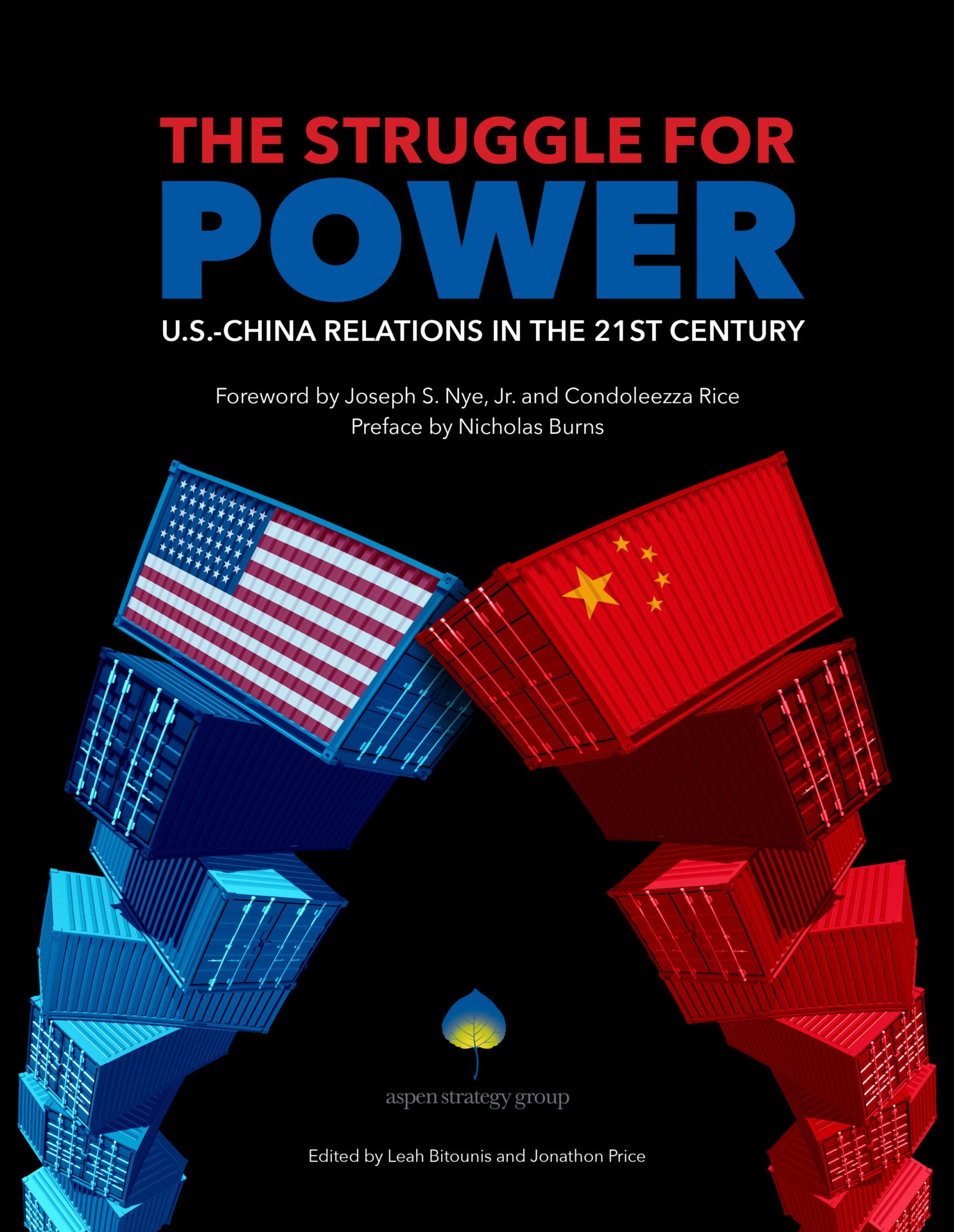

Geopolitical Showdown A Key Military Base And The Us China Power Struggle

Apr 26, 2025

Geopolitical Showdown A Key Military Base And The Us China Power Struggle

Apr 26, 2025 -

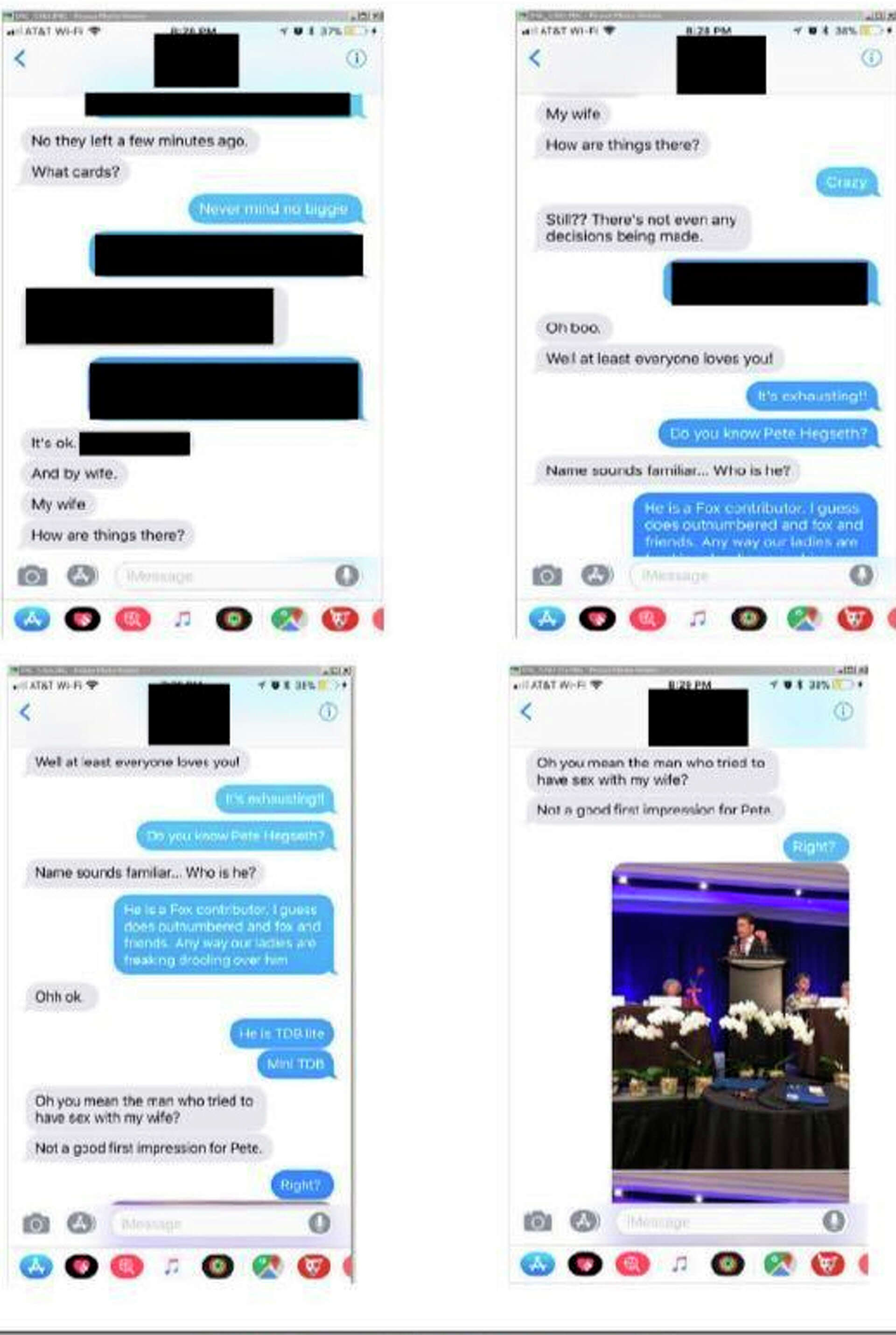

Pentagon Chaos Exclusive Report On Hegseths Reaction To Leaks And Infighting

Apr 26, 2025

Pentagon Chaos Exclusive Report On Hegseths Reaction To Leaks And Infighting

Apr 26, 2025 -

Activision Blizzard Acquisition Ftcs Appeal And The Future Of Gaming

Apr 26, 2025

Activision Blizzard Acquisition Ftcs Appeal And The Future Of Gaming

Apr 26, 2025 -

Actors Join Writers Strike A Complete Shutdown Of Hollywood

Apr 26, 2025

Actors Join Writers Strike A Complete Shutdown Of Hollywood

Apr 26, 2025 -

A Conservative Harvard Professors Prescription For Harvards Future

Apr 26, 2025

A Conservative Harvard Professors Prescription For Harvards Future

Apr 26, 2025