Turning Trash To Treasure: An AI-Powered Podcast From Scatological Documents

Data Acquisition and Cleaning: The Foundation of the AI-Powered Podcast

Creating an AI-powered podcast from scatological documents begins with a solid foundation: acquiring and meticulously cleaning the data. This crucial initial stage directly impacts the quality and accuracy of the final product.

Sourcing Scatological Documents:

Gathering relevant data presents unique challenges. Sources include:

- Archives and Libraries: These institutions house vast collections of historical documents, many of which may contain valuable, yet overlooked, information. Access often requires navigating complex procedures and obtaining necessary permissions.

- Online Repositories: Digital archives and online databases offer convenient access to a wealth of digitized documents. However, verifying authenticity and ensuring data quality remains crucial.

- Private Collections: Some scatological documents may be held in private collections, requiring negotiation and potentially ethical considerations.

Ethical considerations are paramount. Data privacy concerns must be addressed, particularly when dealing with personal or sensitive information. Careful adherence to copyright laws and obtaining necessary permissions are essential when using scatological documents for research and podcast creation.

Data Preprocessing and Cleaning:

Raw data requires significant preprocessing before AI analysis. This stage involves:

- Data Cleaning: Removing irrelevant characters, correcting typos, and handling inconsistencies in formatting and language.

- Handling Inconsistencies: Standardizing date formats, names, and other data elements is critical for accurate analysis.

- Dealing with Missing Data: Employing techniques like imputation or removal to manage incomplete datasets, and recording which approach was used so later analysis can account for it.

The quality of the cleaned data directly influences the accuracy and reliability of the AI's insights.
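The preprocessing steps above can be sketched in a few lines of Python. This is a minimal illustration using only the standard library, not a complete pipeline. The record layout (`text` and `date` keys), the `DATE_FORMATS` list, and the function names are all assumptions made for the example.

```python
import re
import unicodedata
from datetime import datetime

# Hypothetical date layouts; extend for the formats your corpus actually uses.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%d %B %Y")


def clean_text(raw):
    """Normalize Unicode, drop control characters, and collapse whitespace."""
    text = unicodedata.normalize("NFKC", raw)
    text = "".join(ch for ch in text if ch.isprintable() or ch.isspace())
    return re.sub(r"\s+", " ", text).strip()


def standardize_date(value):
    """Return an ISO 8601 date string, or None if no known layout matches."""
    if not value:
        return None
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None


def preprocess(records):
    """Clean each record; drop records without text, keep unknown dates as None."""
    cleaned = []
    for rec in records:
        text = clean_text(rec.get("text") or "")
        if not text:
            continue  # removal: nothing left to analyze
        cleaned.append({"text": text, "date": standardize_date(rec.get("date"))})
    return cleaned
```

Records with no usable text are removed, while a date that cannot be parsed is kept as `None` rather than guessed. Whether to impute such values instead depends on the corpus and on how the dates are used later.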

AI-Driven Analysis and Interpretation of Scatological Documents

Once the data is clean, AI algorithms can extract meaningful insights.

The Role of Natural Language Processing (NLP):

NLP algorithms are instrumental in analyzing the textual content of scatological documents. Techniques include:

- Sentiment Analysis: Determining the overall emotional tone expressed in the documents. This offers a rough gauge of how the authors viewed specific historical events or social issues, though not necessarily of public opinion as a whole.

- Topic Modeling: Identifying recurring themes and topics within the corpus of documents. This enables the podcast to focus on key narratives and perspectives.

- Named Entity Recognition (NER): Extracting key individuals, locations, and organizations mentioned in the documents. This adds context and depth to the storytelling.

These NLP techniques help uncover hidden patterns and relationships within the data that human researchers might miss.
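To make two of these techniques concrete, here is a deliberately simple sketch in standard-library Python. It uses a word-list sentiment score and a capitalization heuristic as a crude stand-in for NER. A real project would more likely rely on a trained NLP model. The lexicons, the stopword set, and the function names are hypothetical and exist only for illustration.

```python
import re
from collections import Counter

# Tiny hypothetical lexicons, for illustration only.
POSITIVE = {"good", "clean", "healthy", "improved"}
NEGATIVE = {"foul", "filthy", "disease", "complaint"}

# Capitalized words that are usually sentence openers rather than names.
NOT_ENTITIES = {"The", "A", "An", "This", "That"}


def tokenize(text):
    """Lowercase the text and split it into alphabetic tokens."""
    return re.findall(r"[a-z']+", text.lower())


def sentiment_score(text):
    """Return (positive - negative) / total tokens; 0.0 for empty text."""
    tokens = tokenize(text)
    if not tokens:
        return 0.0
    pos = sum(t in POSITIVE for t in tokens)
    neg = sum(t in NEGATIVE for t in tokens)
    return (pos - neg) / len(tokens)


def candidate_entities(text):
    """Count runs of capitalized words as rough entity candidates.

    This heuristic is not real NER: it cannot tell people from places
    and will still pick up some sentence-initial words.
    """
    runs = re.findall(r"\b[A-Z][a-z]+(?:\s+[A-Z][a-z]+)*", text)
    return Counter(run for run in runs if run not in NOT_ENTITIES)
```

For a made-up sentence such as "The foul drains near Fleet Street drew a complaint from John Smith.", the score is negative and the candidates are "Fleet Street" and "John Smith". Results from a heuristic like this should be treated as leads for a human researcher to check, not as findings.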

Machine Learning for Pattern Recognition:

Machine learning algorithms play a vital role in identifying trends and patterns within the data:

- Clustering Algorithms: Grouping similar documents together based on shared themes or characteristics. This helps to create structured and coherent podcast episodes.

- Predictive Modeling: Using patterns in earlier documents to anticipate trends in later ones, staying within the period and context the corpus actually covers.

- Time Series Analysis: Studying patterns and trends in the data across different time periods, offering insights into how attitudes and societal dynamics have changed.

These models aid in creating a compelling narrative arc for the podcast, highlighting key discoveries and insights.
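As a rough illustration of clustering and time series analysis, the sketch below groups documents by word overlap (Jaccard similarity) and counts mentions of a term per decade. It uses only the standard library. The greedy grouping is a simple stand-in for a proper clustering algorithm, and the `threshold` value, the record layout (`text` and `year` keys), and the function names are assumptions for the example.

```python
import re
from collections import Counter


def token_set(text):
    """Return the set of lowercase alphabetic tokens in the text."""
    return set(re.findall(r"[a-z]+", text.lower()))


def jaccard(a, b):
    """Jaccard similarity of two sets; 0.0 when both are empty."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def greedy_clusters(texts, threshold=0.3):
    """Put each text in the first cluster whose seed text is similar enough."""
    clusters = []  # list of (seed token set, member indices)
    for i, text in enumerate(texts):
        tokens = token_set(text)
        for seed, members in clusters:
            if jaccard(seed, tokens) >= threshold:
                members.append(i)
                break
        else:
            clusters.append((tokens, [i]))  # no match: start a new cluster
    return [members for _, members in clusters]


def mentions_per_decade(records, term):
    """Count records per decade whose text contains the term as a whole word."""
    counts = Counter()
    for rec in records:
        if term in token_set(rec["text"]):
            counts[(rec["year"] // 10) * 10] += 1
    return dict(sorted(counts.items()))
```

Each resulting cluster could seed one episode, and the per-decade counts show when a theme appears or fades. Because the grouping depends on document order and on the chosen threshold, the output is best read as a starting point for editorial judgment.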

Transforming Data into a Compelling Podcast Narrative

The final stage involves translating the AI-generated insights into a captivating podcast.

Crafting the Podcast Episodes:

The raw data analysis is transformed into engaging podcast content through:

- Episode Structuring: Organizing the information into logical and coherent segments.

- Narrative Style: Selecting a narrative approach that best suits the content and target audience (e.g., documentary style, interview format, narrative storytelling).

- Sound Design: Incorporating sound effects and music to enhance the listening experience.

Careful crafting ensures the podcast is both informative and entertaining.

Ensuring Accuracy and Ethical Considerations:

Maintaining accuracy and ethical standards is vital:

- Fact-Checking: Verifying all information presented in the podcast to ensure historical accuracy.

- Transparency: Clearly communicating the role of AI in the research and analysis process.

- Ethical Implications: Addressing potential ethical concerns related to using historical data and ensuring responsible representation.

Rigorous fact-checking and ethical considerations are essential for producing a credible and trustworthy podcast.

Conclusion: Unlocking the Treasure Trove of Scatological Documents with AI

Creating an AI-powered podcast from scatological documents involves a multi-stage process, from meticulous data acquisition and cleaning to sophisticated AI-driven analysis and engaging storytelling. The power of AI lies in its ability to transform seemingly worthless data into valuable insights, uncovering hidden narratives and offering fresh perspectives on the past. This approach delivers historical discoveries, new perspectives, and engaging content, enriching our understanding of history and society.

Ready to uncover the hidden stories within seemingly mundane historical documents? Explore the possibilities of creating your own AI-powered podcast from scatological documents, or similar datasets, today!

Featured Posts

-

Who Will Be The Next Pope Top 10 Cardinal Contenders

Apr 23, 2025

Who Will Be The Next Pope Top 10 Cardinal Contenders

Apr 23, 2025 -

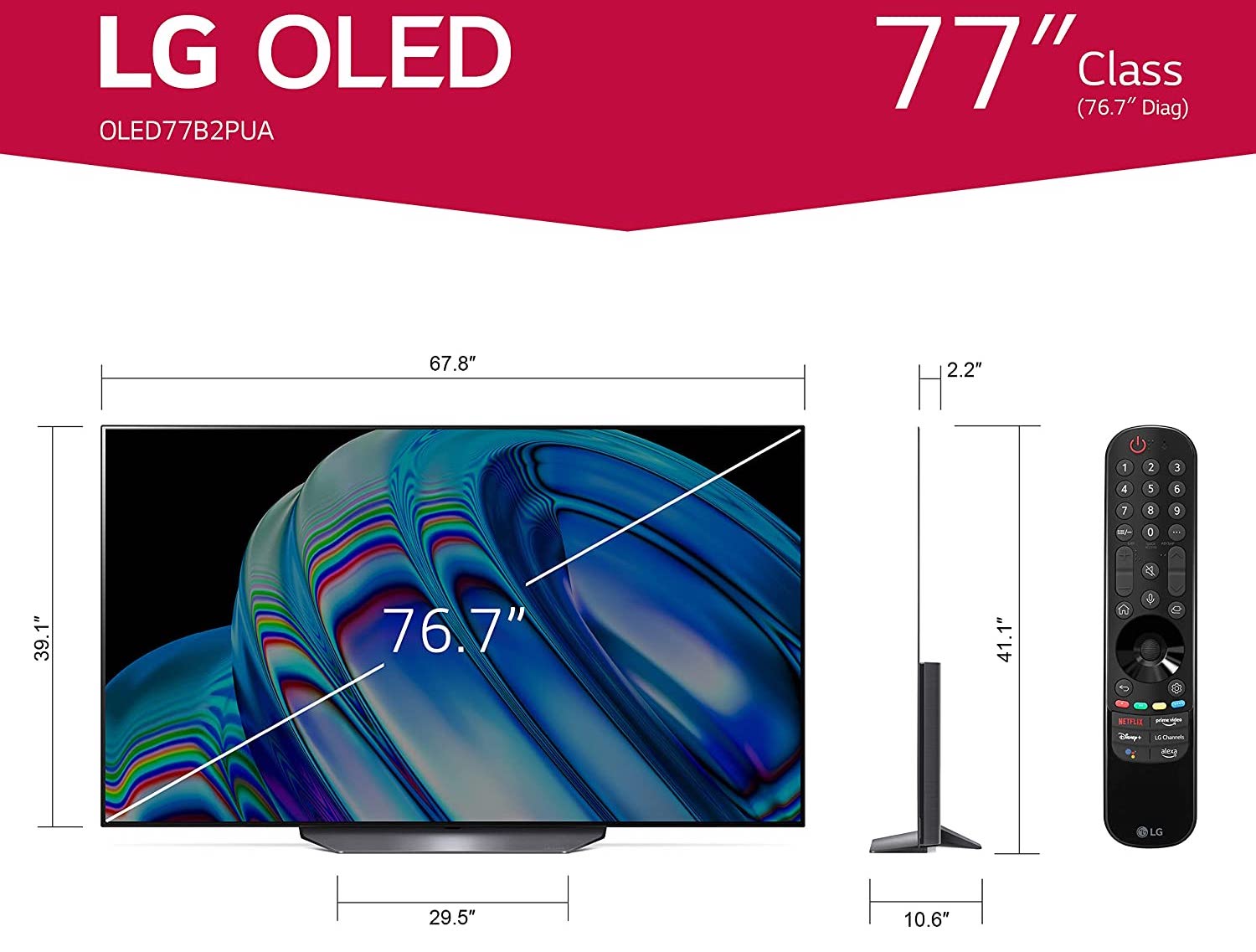

77 Inch Lg C3 Oled Tv Is It Worth The Price

Apr 23, 2025

77 Inch Lg C3 Oled Tv Is It Worth The Price

Apr 23, 2025 -

The Post Roe Landscape Examining The Role Of Over The Counter Birth Control

Apr 23, 2025

The Post Roe Landscape Examining The Role Of Over The Counter Birth Control

Apr 23, 2025 -

Tigers Vs Brewers Detroit Suffers 5 1 Defeat Second Series Loss

Apr 23, 2025

Tigers Vs Brewers Detroit Suffers 5 1 Defeat Second Series Loss

Apr 23, 2025 -

The Science Behind Shota Imanagas Unhittable Splitter A Statistical Analysis

Apr 23, 2025

The Science Behind Shota Imanagas Unhittable Splitter A Statistical Analysis

Apr 23, 2025